Summary

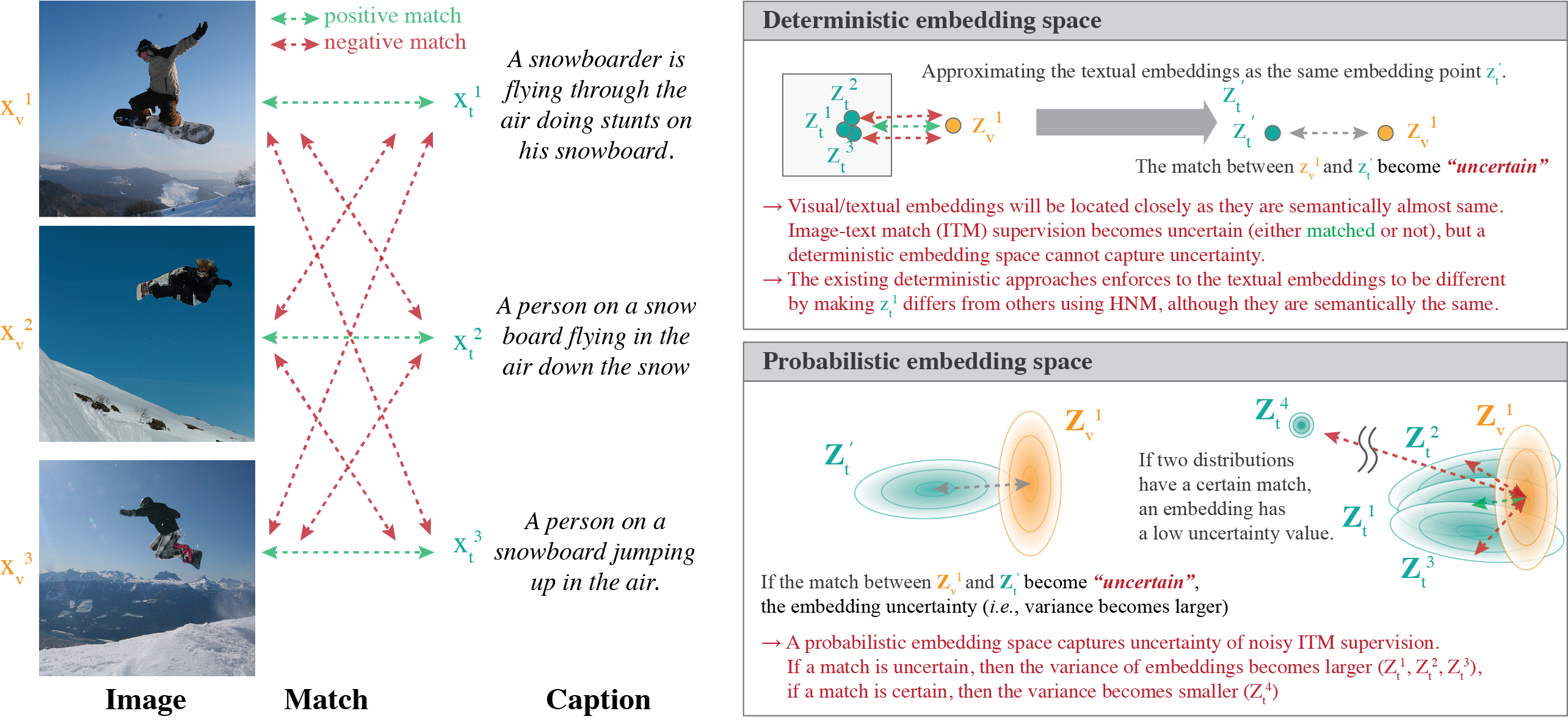

- Image-Text Matching (ITM) suffers from inherent ambiguity caused by the multiplicity of valid matches and the imperfect annotations of ITM datasets (see figure).

- Probabilistic embeddings can capture the ambiguity of ITM datasets, but the existing method, PCME (Chun et al., 2021), suffers from expensive computation and from loss saturation under abundant false negatives.

- PCME++ is an improved probabilistic image-text representation that introduces (1) a new closed-form probability distance, named closed-form sampled distance (CSD), and (2) a new matching objective based on CSD that replaces the expensive sampling-based approximation used by PCME.

- PCME++ also uses two additional techniques: pseudo-positive matches and mixed sample data augmentation for probabilistic matching. These techniques address the loss saturation caused by abundant false negatives.

- Experimental results on MS-COCO, CxC, and ECCV Caption show the effectiveness of PCME++. Additional experiments show that the learned uncertainty helps in understanding dataset ambiguity. Furthermore, this paper investigates the potential of PCME++ for automatic prompt engineering using the learned text uncertainty.
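To make the summary concrete, the sketch below computes a closed-form distance between two diagonal Gaussian embeddings as the expected squared Euclidean distance between independent samples, E||z_v - z_t||^2 = ||mu_v - mu_t||^2 + sum(var_v + var_t). It then turns that distance into a match probability of the form sigmoid(-a * d + b) scored with binary cross-entropy. This is an illustrative, plain-Python sketch under stated assumptions and is not the repository's implementation. The function names `csd`, `match_prob`, and `matching_loss` are hypothetical, and `a` and `b` stand in for scalars that would be learned during training.

```python
import math
from typing import Sequence


def csd(mu_v: Sequence[float], var_v: Sequence[float],
        mu_t: Sequence[float], var_t: Sequence[float]) -> float:
    """Closed-form E||z_v - z_t||^2 for independent diagonal Gaussians.

    Assumes z_v ~ N(mu_v, diag(var_v)) and z_t ~ N(mu_t, diag(var_t)).
    Result = squared distance of the means + sum of both variances.
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_v, mu_t))
    var_term = sum(a + b for a, b in zip(var_v, var_t))
    return mean_term + var_term


def match_prob(d: float, a: float = 1.0, b: float = 0.0) -> float:
    """Match probability sigmoid(-a * d + b), computed in a numerically stable way.

    a and b are hypothetical scalars (learnable in a real training setup).
    """
    z = -a * d + b
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matching_loss(d: float, is_match: bool, a: float = 1.0, b: float = 0.0) -> float:
    """Binary cross-entropy between the match probability and a 0/1 match label."""
    p = match_prob(d, a, b)
    eps = 1e-12  # avoid log(0)
    return -math.log(p + eps) if is_match else -math.log(1.0 - p + eps)


if __name__ == "__main__":
    # A hypothetical image/caption pair in a 2-D embedding space.
    d = csd([0.0, 0.0], [0.1, 0.1], [1.0, 0.0], [0.1, 0.1])
    print(d, matching_loss(d, is_match=True), matching_loss(d, is_match=False))
```

Because the variance term enters the distance additively, lowering the loss on a positive pair requires shrinking both the mean gap and the variances. Unambiguous pairs can therefore reach small uncertainty. Pairs that are labeled inconsistently are pulled in opposite directions and are expected to keep larger variances.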

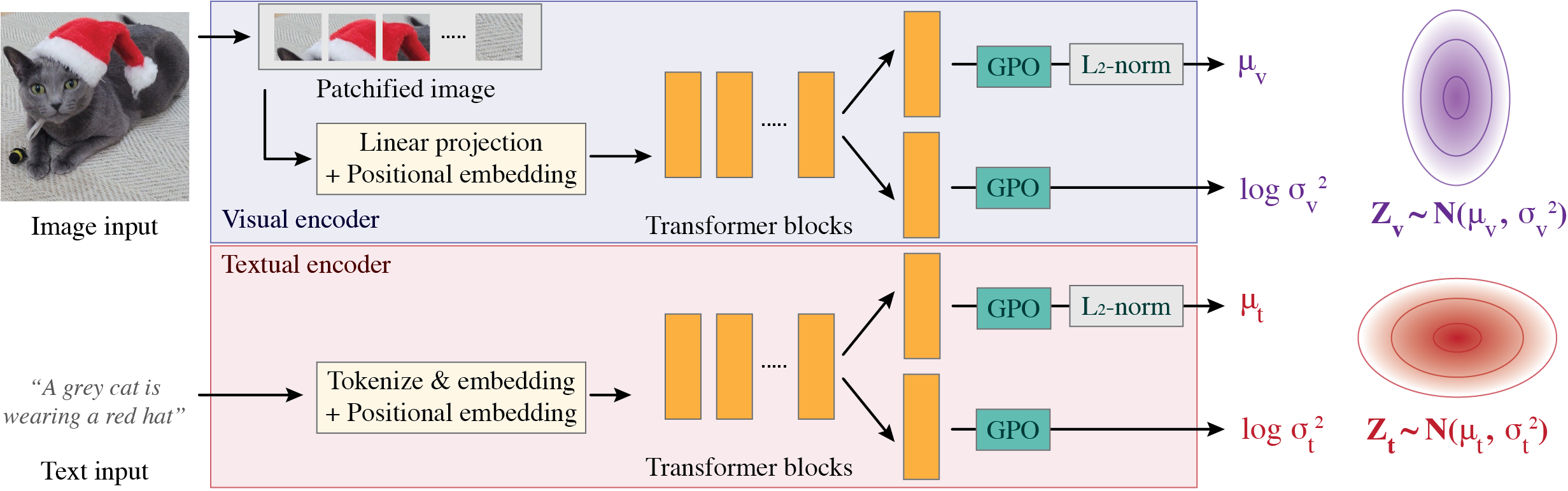

Architecture

2D Toy example

The animations below show the effectiveness of the proposed closed-form sampled distance (CSD) compared to the Wasserstein distance. Details of the toy dataset are described in the paper. Here, red, yellow, and green are "certain" classes, i.e., their samples should have small uncertainties (a smaller radius in the animation), while the other classes contain "uncertain" samples lying between two classes. For example, during training, each sample of the brown class is randomly observed as either red or green. This introduces ambiguity into the toy dataset, and our goal is to design a method that captures this inherent ambiguity. Note that state-of-the-art ITM methods since VSE++ use a triplet loss with "hardest negative mining (HNM)". However, the following animations show that hardest negative mining leads to an unintended embedding space when the dataset is ambiguous. Meanwhile, without negative mining, the method fails to distinguish certain examples from uncertain ones.

Triplet loss with hardest negative mining

Triplet loss without negative mining
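The two triplet-loss variants compared in the toy example differ only in how in-batch negatives are aggregated. The sketch below shows a bidirectional hinge loss over a square similarity matrix whose diagonal holds the positive pairs. With `hardest=True`, only the single hardest negative per anchor contributes, in the style of VSE++. With `hardest=False`, all negatives are summed. This is a generic, plain-Python illustration. The function name, the `margin` default, and the matrix layout are assumptions, not code from this repository.

```python
from typing import List


def triplet_loss(sim: List[List[float]], margin: float = 0.2, hardest: bool = True) -> float:
    """Bidirectional hinge-based triplet loss over an n x n similarity matrix.

    sim[i][j] is the similarity between image i and caption j; the diagonal
    holds the positive pairs. hardest=True keeps only the largest violation
    per anchor (hardest negative mining); hardest=False sums all violations.
    """
    n = len(sim)
    if n < 2:
        raise ValueError("need at least two pairs to form negatives")
    total = 0.0
    for i in range(n):
        pos = sim[i][i]
        # Image i as anchor: negatives are captions j != i.
        i2t = [max(0.0, margin - pos + sim[i][j]) for j in range(n) if j != i]
        # Caption i as anchor: negatives are images j != i.
        t2i = [max(0.0, margin - pos + sim[j][i]) for j in range(n) if j != i]
        if hardest:
            total += max(i2t) + max(t2i)
        else:
            total += sum(i2t) + sum(t2i)
    return total


if __name__ == "__main__":
    sim = [[0.9, 0.8, 0.1],
           [0.2, 0.7, 0.6],
           [0.3, 0.1, 0.5]]
    print(triplet_loss(sim, hardest=True), triplet_loss(sim, hardest=False))
```

With hardest negative mining, each anchor's gradient comes entirely from one negative. When the data is ambiguous, that "negative" can easily be a false negative, such as a brown sample observed as red. This is one plausible reading of why the HNM embedding space in the animation becomes distorted.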

How about probabilistic approaches? The following animations show that PCME++ with the proposed CSD captures the inherent ambiguity of the dataset, i.e., certain samples have low uncertainty (a small radius) and uncertain samples have high uncertainty (a large radius), while the embedding space still separates the classes. In contrast, PCME++ with the Wasserstein distance fails to capture the uncertainty of the dataset: all samples have similar uncertainty. This shows that the proposed CSD is a proper uncertainty-aware probabilistic distance, while the Wasserstein distance is not. A more detailed discussion can be found in the paper.

PCME++ with the proposed distance (CSD)

PCME++ with Wasserstein distance (WD)
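One way to see why the two distances behave differently is to compare them on identical Gaussians. The sketch assumes diagonal Gaussian embeddings and uses the standard closed form of the squared 2-Wasserstein distance, ||mu_1 - mu_2||^2 + ||sigma_1 - sigma_2||^2. It compares this with the expected squared distance between samples, which is used here as the CSD. The function names are hypothetical, and the comparison is a simplified illustration rather than the paper's analysis.

```python
import math
from typing import Sequence


def csd(mu1: Sequence[float], var1: Sequence[float],
        mu2: Sequence[float], var2: Sequence[float]) -> float:
    """Expected squared distance between samples: ||mu1 - mu2||^2 + sum(var1 + var2)."""
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    return mean_term + sum(a + b for a, b in zip(var1, var2))


def wasserstein2_sq(mu1: Sequence[float], var1: Sequence[float],
                    mu2: Sequence[float], var2: Sequence[float]) -> float:
    """Squared 2-Wasserstein distance between diagonal Gaussians.

    ||mu1 - mu2||^2 + ||sigma1 - sigma2||^2, where sigma = sqrt(var).
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    std_term = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(var1, var2))
    return mean_term + std_term


if __name__ == "__main__":
    mu = [0.0, 0.0]
    for v in (0.01, 1.0):
        var = [v, v]
        # Same Gaussian on both sides, only the spread changes.
        print(v, wasserstein2_sq(mu, var, mu, var), csd(mu, var, mu, var))
```

The Wasserstein distance is zero for any pair of identical Gaussians, however wide they are. A positive pair therefore gets no pressure to shrink its uncertainty, which is consistent with all samples ending up with similar radii. The CSD grows with the variances, so it rewards small uncertainty for confidently matched pairs.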

The full experimental results

The full experimental results, including error bars, can be found in this spreadsheet.

Citation

@inproceedings{chun2023pcmepp,
  title={Improved Probabilistic Image-Text Representations},
  author={Chun, Sanghyuk},
  year={2024},
  booktitle={International Conference on Learning Representations (ICLR)},
}